Turn on your favorite streaming service or scroll through social media, and you’ll probably stumble across something AI-generated. There are AI-generated podcasts, professional headshot generators, meme creators, and, of course, AI music and image generators of all kinds.

In fact, I’d bet you didn’t even realize just how much AI-generated content has already snuck into the mainstream, including your favorite shows and services.

The Pervasiveness of AI Music

When I say that AI music is everywhere you look, I am not exaggerating. Streaming services like Spotify not only use AI playlist tools to help create “personalized” soundtracks, but also allow and even feature AI-created tracks, provided they do not break copyright law by imitating other artists or stealing lyrics. That means you have likely already come across such songs. On social media platforms like TikTok and Instagram, AI-generated music is often used in video content to provide unique, catchy, copyright-free soundtracks that drive user engagement.

Even the advertising industry is embracing AI to create soundtracks, customize jingles, and produce simple backing tracks at low cost. It’s also being used to compose, generate ideas, and improve sound design for movie and television scores. Overall, the idea is to make things easier for the creator, and people are embracing that ability (and its cost-effectiveness) in droves. But is it a good idea in the long term?

The Good and the Ugly of AI Music

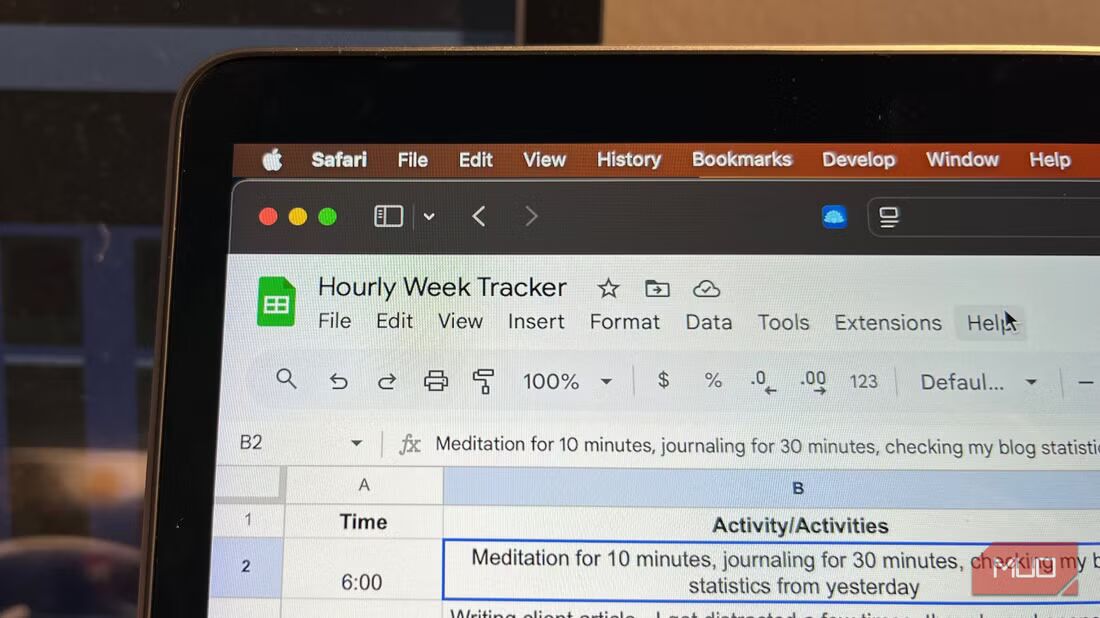

On one hand, AI music can offer some considerable advantages. For musicians just starting out or independent creators with limited budgets, AI tools can lower barriers to entry by offering cost-effective solutions to composition and production challenges. These tools can generate musical compositions that would otherwise have required a suite of expensive instruments, a hired band, or studio time.

AI can also help artists explore new musical avenues, letting them generate new and unexpected sound combinations. They don’t have to be restricted by the traditional genres or instruments; instead, they can experiment with different algorithms and discover brand-new creative horizons, all at the push of a few buttons.

Yet, despite these benefits, there are also some big downsides. The loss of the “human touch” and originality can’t be ignored. Nor can the idea that AI-created music isn’t actually creative; it is just an algorithm spitting out information.

In this sense, it is very much like the analog versus digital debate over which is more “true” to the original artist’s intent. AI music struggles to capture the nuances and imperfections that make human-made music connect with listeners on a deeper level and evoke real emotions within us.

There is also the issue of sounding too much like, or even straight-up copying, “real” artists. We saw this just last year when a TikToker named Ghostwriter977 released “Heart on My Sleeve,” a song featuring AI-generated vocals in the style of popular artists The Weeknd and Drake. The song gained massive popularity, but was quickly taken down over copyright claims. This will only happen more often, and traditional artists will have to stand up for their creative rights because of it.

Music Without Emotion

Another downside is that while AI music can sound good, the complexity of sound and emotion is something that AI just can’t quite grasp. This is true whether AI is being used to create new music or as a stand-in for other artists.

Take, for instance, how AI has been used to mimic the signature vocals of artists like Linkin Park’s Chester Bennington. I must admit that, as a fan devastated at his passing, it would be good to hear him again. Unfortunately, while it can be fun and nostalgic to hear other songs in his signature style, the results come across as jarring and inauthentic. Our ears are finely attuned to the expressive power of music, hearing the excitement, pain, or anger behind every note, which isn’t something AI can replicate.

At least, not yet (and hopefully not ever).

AI Doesn’t Sound as Good, and You Can Tell the Difference

While I can see the benefits of AI, I hate hearing AI music played out loud. To me, it sounds inauthentic, and as soon as I notice it, that is all I can pay attention to. It’s like noticing the incorrect details in AI “art.” Something is always wrong, like too many fingers or lines that go nowhere, and AI music is the same. Lyrics might sound “alright” at first, but something is lacking. Or the tones of the music and vocals technically work, but the emotion behind them just isn’t there. Plus, while the idea of being able to just “make” music by plugging ideas into an AI algorithm might appeal to some, I believe it should never override the real creativity of human-made music.

This means that finding a balance between AI’s benefits, i.e., its ease of use and accessibility, and its drawbacks will be a major open question in the future. And to make sure AI is used correctly without hurting artists, we are going to need pushback from listeners everywhere.